The only way I got the script to work was to define these environment variables before making an HTTP request: os. …

-D, --daemon: Daemonize instead of running in the foreground.

This procedure assumes familiarity with Docker and Docker Compose.

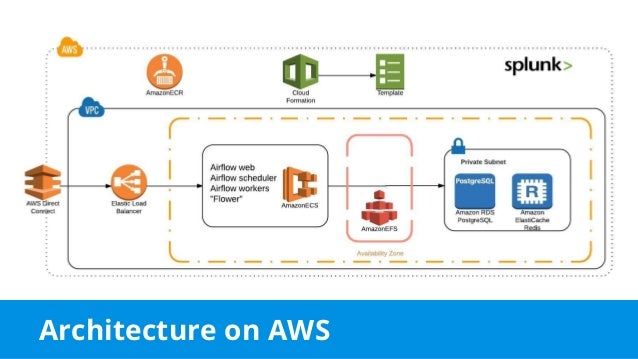

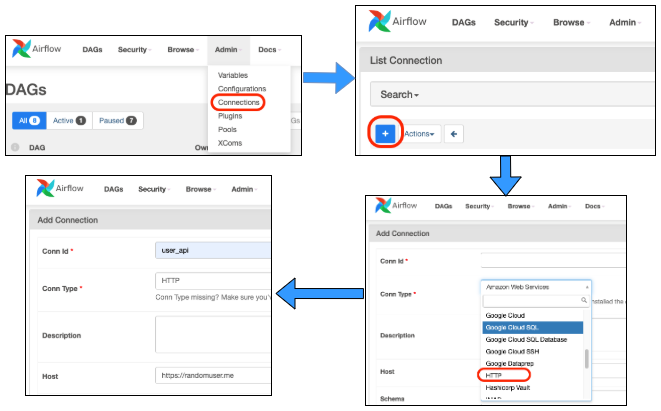

I have configured Airflow with a MySQL metadata database and the LocalExecutor.

In this article, DAG sharing between the webserver, scheduler, and Kubernetes Executor was solved using S3, which is relatively easy to use, and redundant webserver and scheduler instances were configured behind a load balancer.

For information about creating an Astro project, see …

If your apache-airflow installation requires redis or rabbitmq services, then you can specify the dependency here.

EnvironmentFile: specifies the file where the service will find its environment variables.

User: set your actual username here; the specified user ID will be used to invoke the service.

ExecStart: here you can specify the command to be …

AIRFLOW_CORE_FERNET_KEY=some value
AIRFLOW_WEBSERVER_SECRET_KEY=some value

The setup itself seems to be working, as confirmed by an exposed config in the webserver GUI: link to a screenshot.

… 2 version in the Airflow webserver, I am still seeing the 3DES cipher warning and broken RC4.

… service rabbitmq …

Setting up Airflow on AWS Linux was not straightforward because of outdated default packages.

When nonzero, Airflow periodically refreshes webserver workers by bringing up new ones and killing old ones.

Everything was working before yesterday (3/23), and this issue is fairly recent. Here is my config file definition: #airflow-webserver.
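Airflow reads configuration overrides from environment variables named AIRFLOW__{SECTION}__{KEY}, with double underscores on both sides of the section name. The two names quoted above appear with single underscores, which may simply be a formatting loss in the quote. The following is a minimal sketch for checking that the expected override names are present in an environment. The helper names `airflow_env_name` and `missing_overrides` and the sample environment are illustrative assumptions, not part of Airflow.

```python
from typing import Iterable, List, Mapping, Tuple


def airflow_env_name(section: str, key: str) -> str:
    """Build the override name Airflow looks up for a config option."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def missing_overrides(
    environ: Mapping[str, str], options: Iterable[Tuple[str, str]]
) -> List[str]:
    """Return the expected override names that are absent from environ."""
    names = [airflow_env_name(section, key) for section, key in options]
    return [name for name in names if name not in environ]


# Hypothetical environment: one name uses single underscores and is
# therefore not a valid override for [core] fernet_key.
sample_env = {
    "AIRFLOW_CORE_FERNET_KEY": "some value",
    "AIRFLOW__WEBSERVER__SECRET_KEY": "some value",
}
wanted = [("core", "fernet_key"), ("webserver", "secret_key")]

missing = missing_overrides(sample_env, wanted)
print(missing)
```

In practice the same check could be run against `os.environ` in the shell or service that launches the webserver, since the variables must be set for that process and not only for an interactive session.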

As an additional troubleshooting step, I tried running "airflow webserver" as the user like this: sudo -s -u airflow webserver.

… 15 airflow webserver starting - gunicorn workers shutting down.

So far I've installed docker and docker-compose, then followed the puckel/docker-airflow readme, skipping the optional build, then tried to run the container with: docker run -d -p 8080:8080 puckel/docker-airflow webserver. I then stop …

I am able to run airflow test tutorial print_date as per the tutorial docs successfully - the DAG runs, and moreover the print_double succeeds.

I have set up Airflow on an Ubuntu server.

… 7) running on machine (CentOS 7) for a long time.

Using Airflow in a … Airflow has a very rich command line interface that allows for many types of operation on a DAG, starting services, and supporting development and testing.

Last heartbeat was received 5 minutes ago. I don't understand why, since the webserver should look in …

I would like to run the scheduler as a daemon process with …

Run the following two commands to run the Airflow webserver and scheduler in daemon mode:

airflow webserver -D
airflow scheduler -D

Image 8 - Start Airflow and Scheduler (image by author)

Let's make sure our OS is up-to-date.

… cfg. After you save the file, run airflow db init and start the Airflow webserver again: airflow webserver -D.

Starting and Managing the Apache Airflow Unit Files.

ResolutionError: No such revision or branch '449b4072c2da' seems to refer to an existing alembic revision number.

Airflow webserver daemon.

I am upgrading our Airflow instance from 1.9 to 1.10.3, and whenever the scheduler runs now I get a warning that the database connection has been invalidated and it's trying to reconnect. A bunch of these errors show up in a row. The console also indicates that tasks are being scheduled, but if I check the database nothing is ever being written.

The following warning shows up where it didn't before: WARNING - DB connection invalidated. Reconnecting.

Eventually, I'll also get this error: FATAL: remaining connection slots are reserved for non-replication superuser connections

I've tried to increase the SQL Alchemy pool size setting in airflow.cfg, but that had no effect: # The SqlAlchemy pool size is the maximum number of database connections in the pool.

I'm using CeleryExecutor and I'm thinking that maybe the number of workers is overloading the database connections. I run three commands, airflow webserver, airflow scheduler, and airflow worker, so there should only be one worker and I don't see why that would overload the database.

How do I resolve the database connection errors? Is there a setting to increase the number of database connections, and if so, where is it? Do I need to handle the workers differently?

Even with no workers running, starting the webserver and scheduler fresh, when the scheduler fills up the Airflow pools the DB connection warning starts to appear.

I found the following issue in the Airflow Jira: … There is some activity with others saying they see the same issue. It is unclear whether this directly causes the crashes that some people are seeing or whether this is just an annoying cosmetic log. As of yet there is no resolution to this problem.
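One way to reason about the "remaining connection slots" error described above is to estimate how many connections the Airflow processes could open in total. A SQLAlchemy queue pool can hold up to pool size plus max overflow connections, and each process that creates its own engine gets its own pool. The sketch below computes that rough upper bound. It assumes every process uses the same pooled settings, which may not hold for every Airflow process or version, and the process counts and pool values are illustrative assumptions, not measurements from the setup in the question.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ProcessGroup:
    """A set of identical processes that each own one SQLAlchemy engine."""
    name: str
    processes: int


def max_db_connections(
    groups: Iterable[ProcessGroup], pool_size: int, max_overflow: int
) -> int:
    """Upper bound on connections the pooled processes can open in total."""
    per_process = pool_size + max_overflow
    return sum(group.processes for group in groups) * per_process


# Hypothetical deployment: the process counts are assumptions.
groups = [
    ProcessGroup("webserver gunicorn workers", 4),
    ProcessGroup("scheduler", 1),
    ProcessGroup("celery worker processes", 16),
]

budget = max_db_connections(groups, pool_size=5, max_overflow=10)
print(budget)
```

Comparing this figure with the database's own connection limit (max_connections in PostgreSQL, which produces the FATAL message quoted in the question) shows why raising the pool size alone can make the problem worse: a larger pool per process raises the total demand against the same server-side limit.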
